· Rajasekhar Gundala · ELK · 7 min read

How to deploy Elasticsearch 8.8.0 in docker swarm behind Caddy v2.6.4

Elasticsearch is a free and open, distributed, RESTful search engine written in Java. It is used as a search backend and also to store and manage logs, metrics, and similar data.

In this post, I am going to show you how to deploy Elasticsearch 8.8.0 in our Docker Swarm cluster behind Caddy 2.6.4, using a Docker Compose file.

If you want to learn more about Elasticsearch, please go through the below links.

Elasticsearch website

Official documentation

GitHub repository

Let’s start with actual deployment…

Prerequisites

Please make sure you fulfill the requirements below before proceeding to the actual deployment.

Docker Swarm Cluster with GlusterFS as persistent storage.

Caddy as a reverse proxy to expose micro-services externally.

Introduction

Elasticsearch is the distributed, RESTful search and analytics engine at the heart of the Elastic Stack.

Elasticsearch Features

To learn more about Elasticsearch features and capabilities, see the official page.

Persist Elasticsearch Data

Containers are fast to deploy and make efficient use of system resources. Developers get application portability and programmable image management and the operations team gets standard run time units of deployment and management.

Despite all the known benefits of containers, there is one common misperception: because containers are ephemeral, meaning we lose all the data of a container if we restart it or it runs into any issue, they are only good for stateless micro-service applications, and it is not possible to containerize stateful applications.

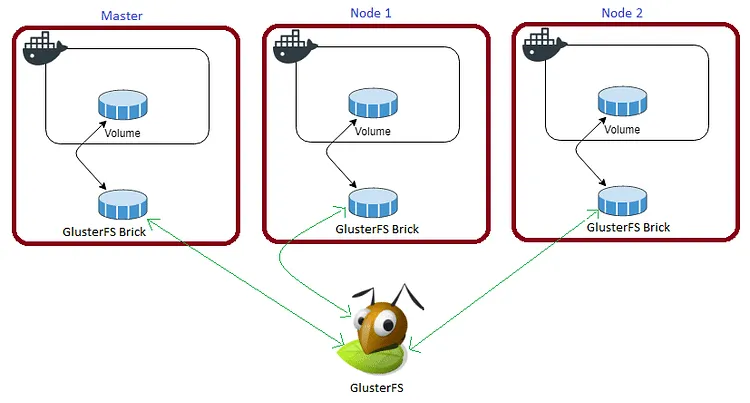

I am going to use GlusterFS to overcome the ephemeral behavior of Containers.

I have already set up a replicated GlusterFS volume so that any data I want to persist is replicated throughout the cluster.

The below diagram explains how the replicated volume works.

The volume is mounted on all the nodes, and when a file is written to the /mnt partition, the data is replicated to all the nodes in the cluster.

If any one of the nodes fails, the application automatically starts on another node without losing any data, and that’s the beauty of the replicated volume.

Persistent application state or data needs to survive application restarts and outages. We store the data or state in GlusterFS and perform periodic backups of it.

Elasticsearch will remain available if something goes wrong with any of the nodes in our Docker Swarm Cluster. The data is available to all the nodes in the cluster because of the GlusterFS replicated volume.

I am going to create three folders, config, elasticsearch, and kibana, in the /mnt directory to store the configuration files, Elasticsearch data, and Kibana data so that they are available throughout the cluster.

cd /mnt

sudo mkdir -p config

sudo mkdir -p elasticsearch

sudo mkdir -p kibana
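The compose file later in this post bind-mounts /mnt/config/elasticsearch.yml and /mnt/config/kibana.yml, so both files should exist before the stack is deployed. The following is a minimal sketch, not the configuration used for this post: the function name prepare_elastic_dirs is hypothetical, and the file contents are assumed to resemble the defaults shipped in the official 8.x images. Credentials and TLS settings are deliberately left out and need to be added for your own setup.

```shell
# Sketch: create the three folders and seed default config files if missing.
# prepare_elastic_dirs is a hypothetical helper; pass the base directory
# (this post uses /mnt, the replicated GlusterFS mount).
prepare_elastic_dirs() {
    local base="${1:-/mnt}"
    mkdir -p "$base/config" "$base/elasticsearch" "$base/kibana" || return 1

    # Assumed to resemble the image's default elasticsearch.yml.
    if [ ! -f "$base/config/elasticsearch.yml" ]; then
        cat > "$base/config/elasticsearch.yml" <<'EOF'
cluster.name: "docker-cluster"
network.host: 0.0.0.0
EOF
    fi

    # Assumed to resemble the image's default kibana.yml; "elasticsearch"
    # is the service name from the compose file in this post.
    if [ ! -f "$base/config/kibana.yml" ]; then
        cat > "$base/config/kibana.yml" <<'EOF'
server.host: "0.0.0.0"
elasticsearch.hosts: [ "http://elasticsearch:9200" ]
EOF
    fi
}
```

From a root shell, source the snippet and run prepare_elastic_dirs /mnt. Existing files are left untouched. The official Elasticsearch image runs as uid 1000 with gid 0 by default, so /mnt/elasticsearch may also need to be writable by that user.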

Please watch the below video for Glusterfs Installation

Prepare Elasticsearch Environment

I am going to use docker-compose to prepare the environment file for deploying Elasticsearch. The compose file is written in YAML (originally "Yet Another Markup Language", now officially "YAML Ain't Markup Language") and has the extension .yml or .yaml.

I am going to create application folders in the /opt directory on the manager node of our Docker Swarm cluster to store the configuration files, which are simply Docker Compose files (.yml or .yaml).

Also, I am going to use the caddy overlay network created in the previous Caddy post.

Now it’s time to create a folder, elastic, in the /opt directory to hold the configuration file, i.e., the .yml file for Elasticsearch.

Use the below commands to create the folder.

Go to the /opt directory by typing cd /opt in the Ubuntu console.

Make a folder, elastic, in /opt with sudo mkdir -p elastic.

Let’s get into the elastic folder by typing cd elastic.

Now create a docker-compose file inside the elastic folder using sudo touch elastic.yml.

Open the elastic.yml docker-compose file with the nano editor using sudo nano elastic.yml, then copy and paste the below code into it.

Elasticsearch Docker Compose

Here is the docker-compose file for Elasticsearch.

version: '3.7'

services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.8.0
    volumes:
      # Config file and data directory live on the replicated GlusterFS mount
      - /mnt/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /mnt/elasticsearch:/usr/share/elasticsearch/data
    ports:
      - "9200:9200"
      - "9300:9300"
    environment:
      - "node.name=es-node"
      - "discovery.type=single-node"
      - "bootstrap.memory_lock=true"
      - "ELASTIC_PASSWORD=secret-password"
      - "http.port=9200"
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    networks:
      - caddy
    ulimits:
      memlock:
        soft: -1
        hard: -1
    deploy:
      placement:
        constraints: [node.role == worker]
      replicas: 1
      update_config:
        parallelism: 2
        delay: 10s
      restart_policy:
        condition: on-failure

  kibana:
    # Same version as Elasticsearch
    image: docker.elastic.co/kibana/kibana:8.8.0
    depends_on:
      - elasticsearch
    volumes:
      - /mnt/config/kibana.yml:/usr/share/kibana/config/kibana.yml
      # Kibana keeps its own state under /usr/share/kibana/data
      - /mnt/kibana:/usr/share/kibana/data
    ports:
      - "5601:5601"
    environment:
      - KIBANA_SYSTEM_PASSWORD=secret-password
    networks:
      - caddy
    deploy:
      placement:
        constraints: [node.role == worker]
      replicas: 1
      update_config:
        parallelism: 2
        delay: 10s
      restart_policy:
        condition: on-failure

volumes:
  config:
    driver: "local"
  elasticsearch:
    driver: "local"
  kibana:
    driver: "local"

networks:
  # Overlay network created in the Caddy post
  caddy:
    external: true
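Because the services above use bind mounts, the host paths need to exist on the node where a task is scheduled; otherwise Swarm typically rejects the task. As a rough pre-flight check, the sketch below lists bind-mount sources in a compose file that are missing on the current node. The helper name missing_bind_sources is hypothetical, and it only understands the short "- /host/path:/container/path" syntax used in this post.

```shell
# Sketch: list bind-mount host paths from a compose file that do not exist.
# missing_bind_sources is a hypothetical helper, not a Docker command.
missing_bind_sources() {
    local compose_file="$1" src
    # Keep only list items that start with an absolute host path,
    # strip everything from the first colon on, and test each path.
    grep -E '^[[:space:]]*-[[:space:]]*/[^:]+:' "$compose_file" |
        sed -E 's/^[[:space:]]*-[[:space:]]*([^:]+):.*/\1/' |
        sort -u |
        while read -r src; do
            [ -e "$src" ] || printf '%s\n' "$src"
        done
}
```

Running missing_bind_sources elastic.yml prints nothing when every path exists. Relative sources such as ./Caddyfile and paths containing spaces are not handled by this sketch.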

Caddyfile – Elasticsearch

The Caddyfile is a convenient Caddy configuration format for humans.

Caddyfile is easy to write, easy to understand, and expressive enough for most use cases.

Please find the production-ready Caddyfile for Elasticsearch below.

Learn more about Caddyfile here to get familiar with it.

{
    email you@example.com
    default_sni elastic
    cert_issuer acme
    # Production acme directory
    acme_ca https://acme-v02.api.letsencrypt.org/directory
    # Staging acme directory
    #acme_ca https://acme-staging-v02.api.letsencrypt.org/directory
    servers {
        metrics
        protocols h1 h2c h3
        strict_sni_host on
        trusted_proxies cloudflare {
            interval 12h
            timeout 15s
        }
    }
}

elasticsearch.example.com {
    log {
        output file /var/log/caddy/elasticsearch.log {
            roll_size 20mb
            roll_keep 2
            roll_keep_for 6h
        }
        format console
        level error
    }
    encode gzip zstd
    reverse_proxy elasticsearch:9200
}

kibana.example.com {
    log {
        output file /var/log/caddy/kibana.log {
            roll_size 20mb
            roll_keep 2
            roll_keep_for 6h
        }
        format console
        level error
    }
    encode gzip zstd
    reverse_proxy kibana:5601
}
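Nested blocks make it easy to drop a closing brace when copying a Caddyfile like this one. The sketch below is a cheap structural check that counts opening and closing braces. The helper name braces_balanced is hypothetical, and this is not a Caddy feature.

```shell
# Sketch: succeed only if a Caddyfile has as many "{" as "}".
# braces_balanced is a hypothetical helper, not part of Caddy.
braces_balanced() {
    local file="$1" opens closes
    opens=$(tr -cd '{' < "$file" | wc -c)
    closes=$(tr -cd '}' < "$file" | wc -c)
    # Arithmetic expansion strips any padding that wc may add.
    [ "$((opens))" -eq "$((closes))" ]
}
```

This only catches structural slips. For a full check, caddy validate --config Caddyfile --adapter caddyfile parses and validates the configuration, provided the Caddy build includes every plugin the Caddyfile references (for example, the cloudflare source for trusted_proxies).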

Please see the Caddy post for more insight into deploying it in the Docker Swarm cluster.

Final Elasticsearch Docker Compose (Including caddy server configuration)

Please find the full docker-compose file below.

If you are not familiar with the Caddy reverse proxy, I have already written an article, Caddy in Docker Swarm. Please go through it if you want to learn more.

version: "3.7"

services:
  caddy:
    image: tuneitme/caddy
    ports:
      - target: 80
        published: 80
        mode: host
      - target: 443
        published: 443
        mode: host
      # HTTP/3 uses UDP on port 443
      - target: 443
        published: 443
        mode: host
        protocol: udp
    networks:
      - caddy
    volumes:
      - ./Caddyfile:/etc/caddy/Caddyfile
      - /mnt/caddydata:/data
      - /mnt/caddyconfig:/config
      - /mnt/caddylogs:/var/log/caddy
    deploy:
      placement:
        constraints:
          - node.role == manager
      replicas: 1
      update_config:
        parallelism: 2
        delay: 10s
      restart_policy:
        condition: on-failure

  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.8.0
    volumes:
      - /mnt/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /mnt/elasticsearch:/usr/share/elasticsearch/data
    ports:
      - "9200:9200"
      - "9300:9300"
    environment:
      - "node.name=es-node"
      - "discovery.type=single-node"
      - "bootstrap.memory_lock=true"
      - "ELASTIC_PASSWORD=secret-password"
      - "http.port=9200"
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    networks:
      - caddy
    ulimits:
      memlock:
        soft: -1
        hard: -1
    deploy:
      placement:
        constraints: [node.role == worker]
      replicas: 1
      update_config:
        parallelism: 2
        delay: 10s
      restart_policy:
        condition: on-failure

  kibana:
    image: docker.elastic.co/kibana/kibana:8.8.0
    depends_on:
      - elasticsearch
    volumes:
      - /mnt/config/kibana.yml:/usr/share/kibana/config/kibana.yml
      # Kibana keeps its own state under /usr/share/kibana/data
      - /mnt/kibana:/usr/share/kibana/data
    ports:
      - "5601:5601"
    environment:
      - KIBANA_SYSTEM_PASSWORD=secret-password
    networks:
      - caddy
    deploy:
      placement:
        constraints: [node.role == worker]
      replicas: 1
      update_config:
        parallelism: 2
        delay: 10s
      restart_policy:
        condition: on-failure

volumes:
  caddydata:
    driver: "local"
  caddyconfig:
    driver: "local"
  caddylogs:
    driver: "local"
  config:
    driver: "local"
  elasticsearch:
    driver: "local"
  kibana:
    driver: "local"

networks:
  caddy:
    external: true

Here I used a custom Caddy Docker image with plugins such as Cloudflare DNS and Caddy Auth Portal.

Please find the custom caddy docker image below.

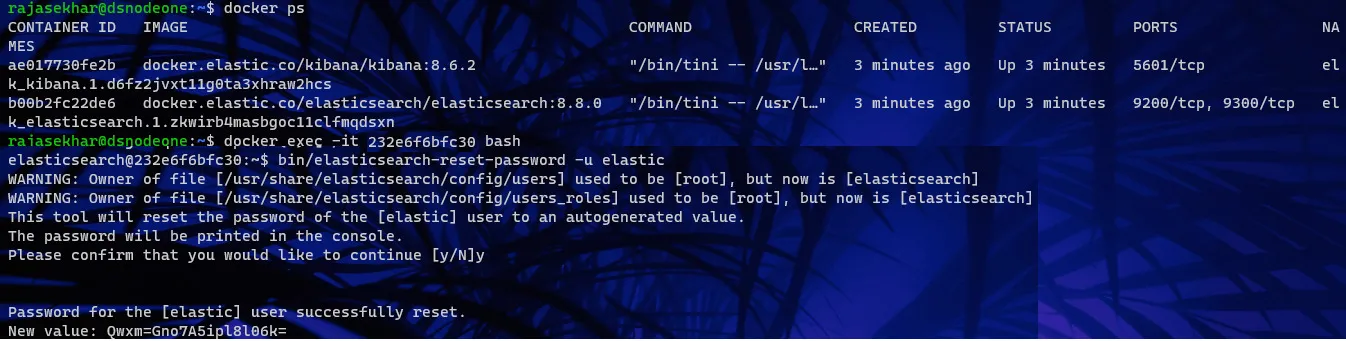

Deploy Elasticsearch Stack using Docker Compose

Now it’s time to deploy the docker-compose file above, elastic.yml, using the command below.

docker stack deploy --compose-file elastic.yml elastic

In the above command, you have to replace elastic.yml with your docker-compose file name and elastic with whatever name you want to call this particular application.

With Docker Compose in Docker Swarm, whatever we deploy is called a Docker stack, and it contains as many services as the application requires.

As mentioned earlier, I named my docker-compose file elastic.yml and my application stack elastic.

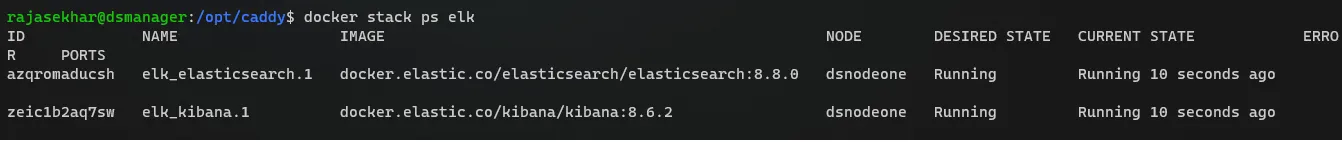

Check the status of the stack by using docker stack ps elastic

Check elastic service logs using docker service logs elastic_elasticsearch

Also check kibana service logs using docker service logs elastic_kibana
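The checks above can also be scripted. The sketch below reports whether every service in a stack has all of its replicas running, based on the Replicas column of docker stack services. The helper names replicas_ready and stack_ready are hypothetical.

```shell
# Sketch: report whether every service in a stack has all replicas running.
# replicas_ready and stack_ready are hypothetical helper names.

# Succeeds for "1/1", fails for "0/1" or "0/0".
replicas_ready() {
    local running="${1%%/*}" desired="${1##*/}"
    [ "$desired" != "0" ] && [ "$running" = "$desired" ]
}

# Usage: stack_ready elastic
stack_ready() {
    local stack="$1" services name replicas rest
    services=$(docker stack services "$stack" --format '{{.Name}} {{.Replicas}}') || return 1
    [ -n "$services" ] || return 1
    # "rest" absorbs suffixes such as "(max 1 per node)".
    while read -r name replicas rest; do
        [ -n "$name" ] || continue
        replicas_ready "$replicas" || { echo "waiting for $name ($replicas)" >&2; return 1; }
    done <<EOF
$services
EOF
}
```

One possible use is a simple wait loop on a manager node: until stack_ready elastic; do sleep 5; done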

One thing we observe is that it automatically redirects to HTTPS with a Let’s Encrypt generated certificate. The certificate information is stored in the /data directory.

I will be using this caddy stack as a reverse proxy / load balancer for the applications I am going to deploy to the Docker Swarm cluster.

Also, I use the docker network caddy to access the applications externally.

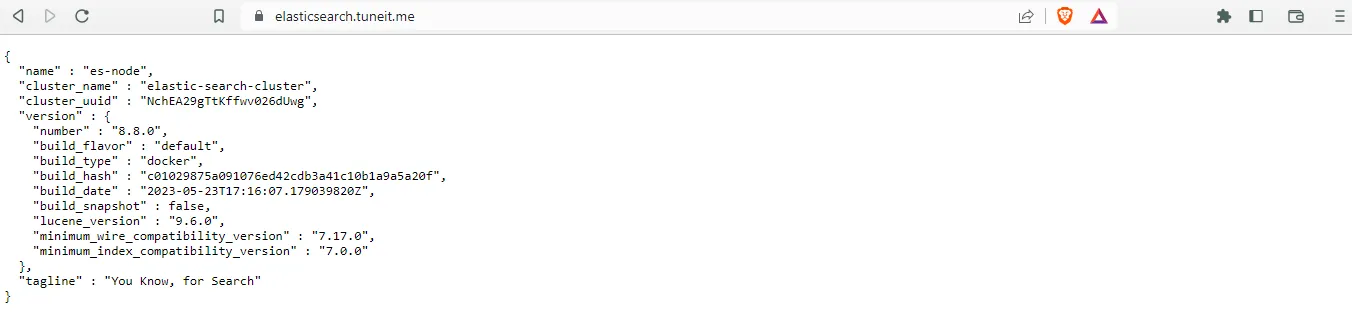

Access Elasticsearch and Kibana

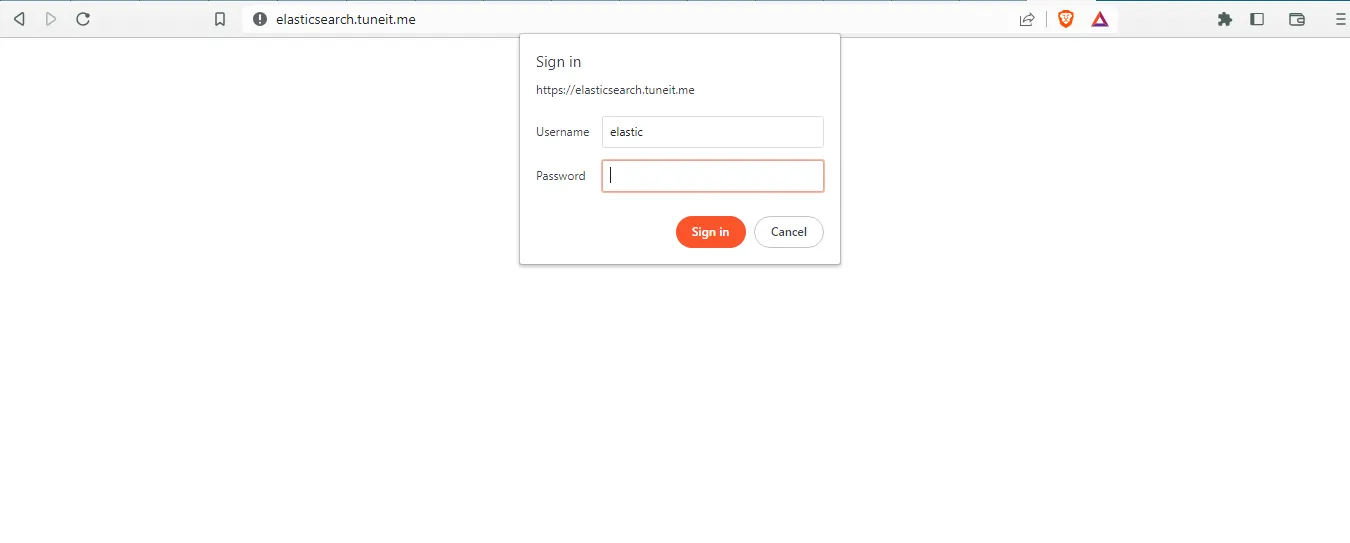

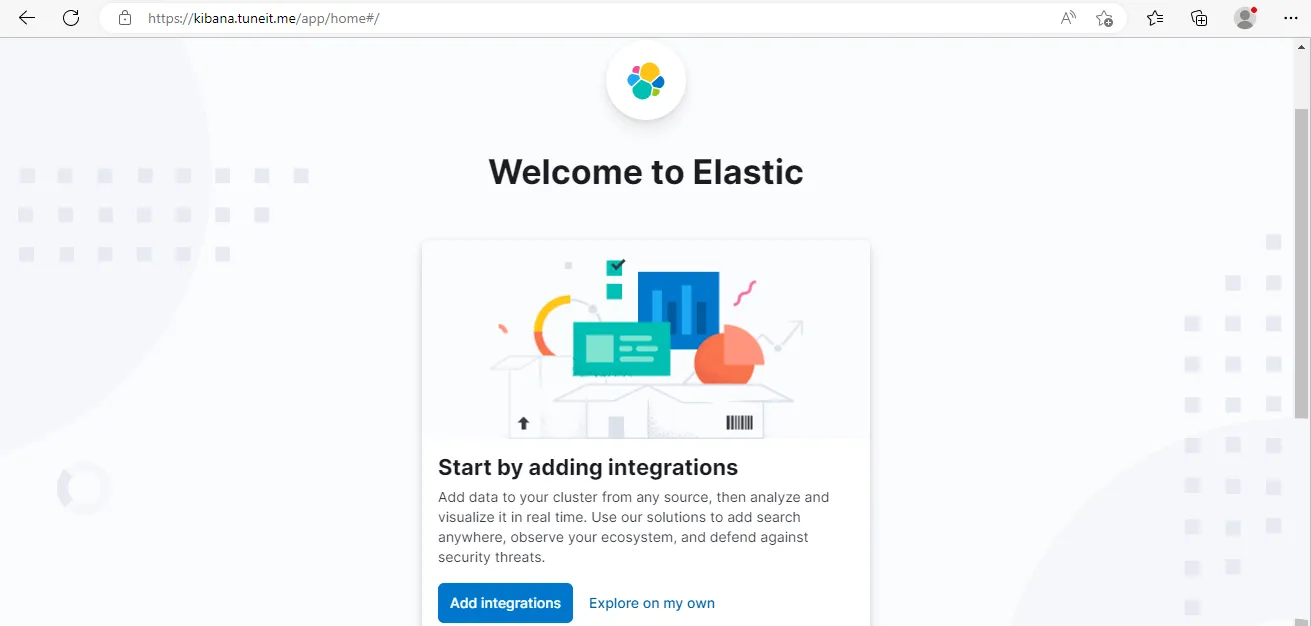

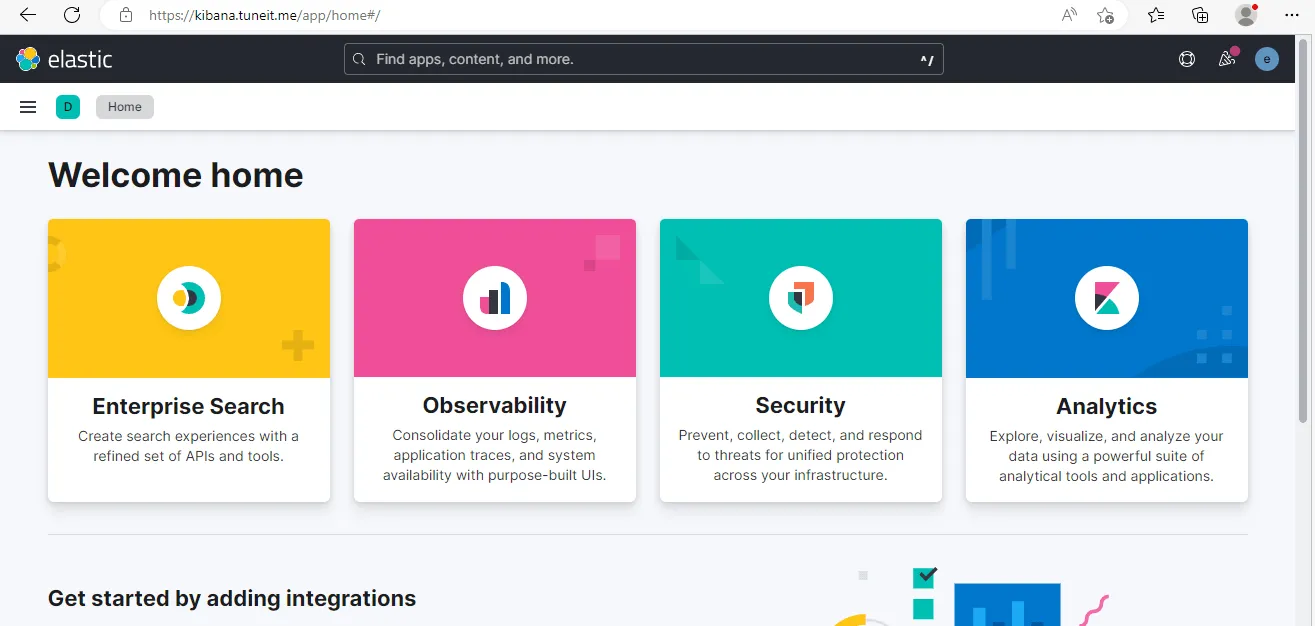

Now open any browser and type elasticsearch.example.com to access Elasticsearch. It will automatically be redirected to https://elasticsearch.example.com (be sure to replace example.com with your actual domain name).

Access Kibana using kibana.example.com. As mentioned above, the Caddy reverse proxy will automatically redirect it to https://kibana.example.com.

Make sure that you have DNS entries for your applications (elasticsearch.example.com and kibana.example.com) in your DNS management application.
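Besides the browser, the endpoint can be checked from a terminal. The sketch below queries the _cluster/health API through the reverse proxy as the built-in elastic user and prints the reported status. The helper names parse_health_status and es_health are hypothetical, and the URL and password are the placeholders used in this post.

```shell
# Sketch: query cluster health through the reverse proxy.
# parse_health_status and es_health are hypothetical helper names.

# Reads a _cluster/health JSON document on stdin and prints its status.
parse_health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Usage: es_health https://elasticsearch.example.com 'secret-password'
es_health() {
    local url="$1" password="$2"
    curl --silent --show-error --fail --user "elastic:${password}" \
        "${url}/_cluster/health" | parse_health_status
}
```

A healthy single-node deployment would be expected to print green, or yellow when some indices have replica shards that cannot be assigned on a single node.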

Please find below images for your reference.

Deployment of Elasticsearch behind Caddy in our Docker Swarm is successful

If you enjoyed this tutorial, please give your input/thought on it by commenting below. It would help me to bring more articles that focus on Open Source to self-host.

Stay tuned for other deployments in coming posts… 🙄